Robots.txt is one of the simplest files on a website, but it is also one of the easiest to get wrong. A single misplaced character can wreck your SEO and stop search engines from accessing important content on your site. In this article we explain what robots.txt is and why it matters.

What Is Robots.txt?

Robots.txt is a file that tells search engine spiders which pages or sections of a website they should not crawl. Most major search engines (including Google, Bing, and Yahoo) recognize and honor robots.txt requests.

Webmasters create a robots.txt file to instruct web robots (typically search engine robots) how to crawl the pages of their website.

In practice, a robots.txt file indicates which parts of a website certain user agents (web-crawling software) may crawl and which parts they may not.

Why Is Robots.txt Important?

Many sites do not need a robots.txt file at all.

That is because Google can usually find and index all of the important pages on your site, and it automatically skips pages that are unimportant or are duplicate versions of other pages.

That said, there are three main reasons you might want to use a robots.txt file.

Block Non-Public Pages: Sometimes your site has pages you do not want indexed, for example a staging version of a page or a login page. These pages need to exist, but you do not want random people landing on them.

In cases like these, you use robots.txt to keep search engine crawlers away from those pages.

Maximize Crawl Budget: If you are having trouble getting all of your pages indexed, you may have a crawl budget problem. By blocking unimportant pages with robots.txt, you let Googlebot spend more of your crawl budget on the pages that actually matter.

Prevent Indexing of Resources: Like robots.txt, meta directives can keep pages out of the index. However, meta directives do not work well for multimedia resources such as PDFs and images. That is where robots.txt comes in.

Robots.txt stops search engines from crawling specific pages on your site. You can check how many of your pages are indexed in Search Console.

If that number matches the number of pages you want indexed, you do not need to bother with a robots.txt file.

If the number is higher than you expected, you need to create a robots.txt file for your website.

Best Practices

Now that we know what a robots.txt file is and why it matters, here are some best practices to follow.

Create a Robots.txt File

Your first step is to create the robots.txt file.

You can create it with any plain text editor.

If you already have a robots.txt file, clear out its existing text. The first thing to set up is the user-agent term.

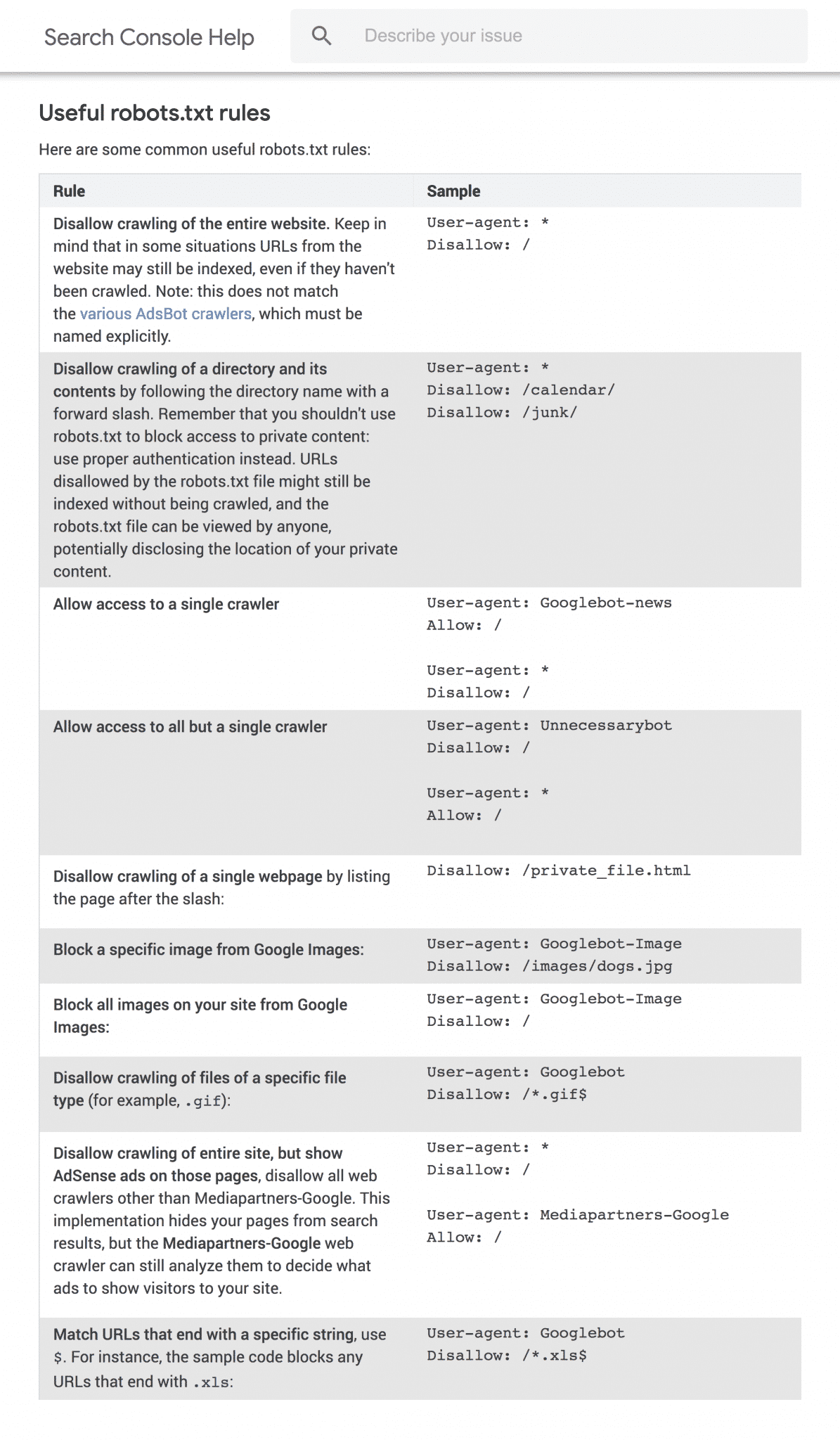

First you need to understand some of the terms used in a robots.txt file; Google's own definitions of these terms are a good reference.

Now set up a simple robots.txt file.

User-agent: X

Disallow: Y

User-agent is the specific bot the rule applies to, and everything after “Disallow” is the page or section you want to block.

Example:

User-agent: googlebot

Disallow: /images

This rule tells Googlebot not to crawl the images folder of your website.

If you want every web robot to follow a rule, use an asterisk (*).

Example:

User-agent: *

Disallow: /images

The asterisk (*) tells all spiders not to crawl your images folder.

This is just one of many ways to use a robots.txt file.
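
The two rule sets above can be checked programmatically. The following is a minimal sketch using Python's standard `urllib.robotparser` module. The `example.com` URL, the `Bingbot` comparison, and the helper name `parse_rules` are assumptions added for illustration. The standard-library parser uses simple prefix matching, so its verdicts may differ from how a particular search engine reads the same file.

```python
from urllib.robotparser import RobotFileParser

GOOGLEBOT_ONLY = """\
User-agent: googlebot
Disallow: /images
"""

ALL_ROBOTS = """\
User-agent: *
Disallow: /images
"""


def parse_rules(text):
    """Hypothetical helper: load robots.txt text into a stdlib parser."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


IMAGE_URL = "https://example.com/images/logo.png"  # assumed example URL

googlebot_only = parse_rules(GOOGLEBOT_ONLY)
all_robots = parse_rules(ALL_ROBOTS)

# The named rule applies to Googlebot only; other crawlers are unaffected.
print("googlebot rule, Googlebot:", googlebot_only.can_fetch("Googlebot", IMAGE_URL))
print("googlebot rule, Bingbot:  ", googlebot_only.can_fetch("Bingbot", IMAGE_URL))

# The asterisk rule applies to every crawler.
print("asterisk rule, Googlebot: ", all_robots.can_fetch("Googlebot", IMAGE_URL))
print("asterisk rule, Bingbot:   ", all_robots.can_fetch("Bingbot", IMAGE_URL))
```

Running the same check against your own file before publishing it is a cheap way to confirm that a rule blocks what you intended and nothing more.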

Make Your Robots.txt File Easy to Find

Once you have created your robots.txt file, it is time to make it live.

Crawlers look for robots.txt at the root of your host, so a copy placed in a subdirectory will not be picked up. Place it here:

https://example.com/robots.txt
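
Because the file lives at a fixed path on the host, its address can be derived from any page URL on the same site. The sketch below shows one way to do that with Python's standard `urllib.parse` module. The function name `robots_txt_url` and the sample page URL are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt address for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; replace the path and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Assumed example page; any URL on the same host gives the same result.
print(robots_txt_url("https://example.com/blog/post?id=7"))
```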

The robots.txt file name is case sensitive, so use a lowercase “r” in the file name.

Check for Errors and Mistakes

It is very important that your robots.txt file is set up correctly. A single mistake could get your entire site de-indexed.

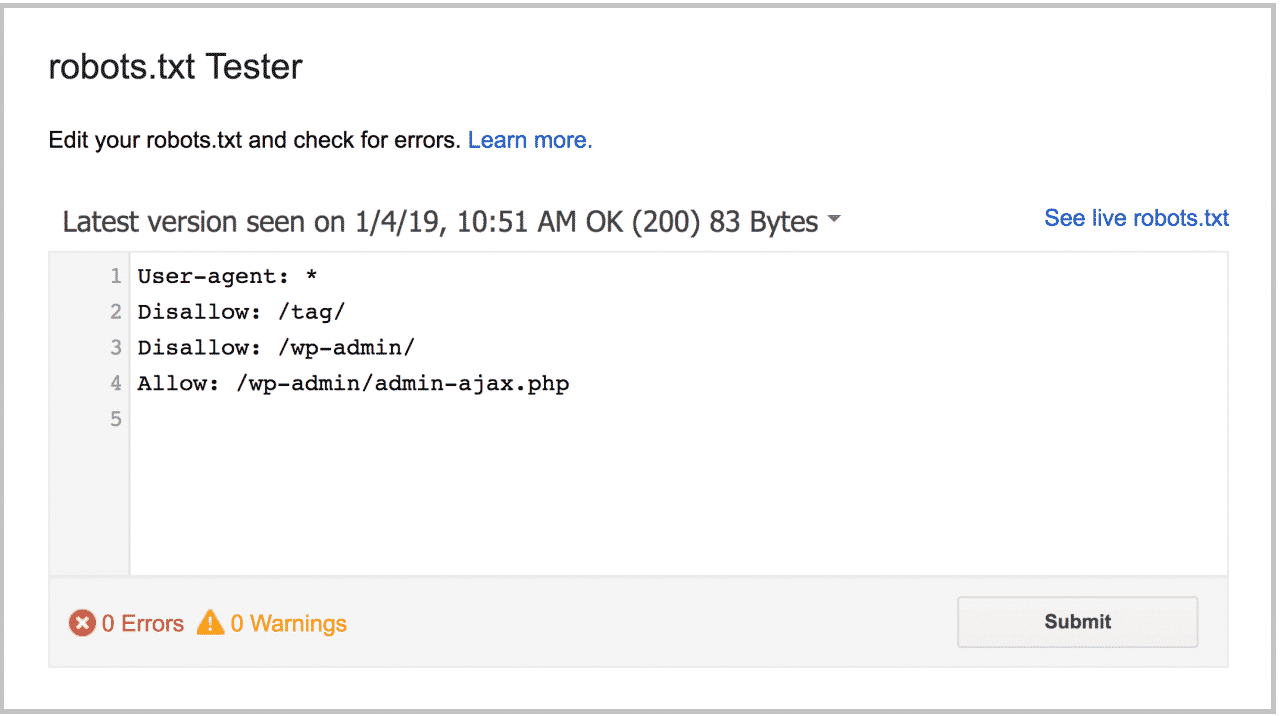

Fortunately, you do not have to guess whether your code is set up correctly: you can use Google's nifty Robots Testing Tool.

It shows you your robots.txt file along with any errors and warnings it finds.
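
As a complement to Google's tool, a few of the most damaging mistakes can be caught locally before the file goes live. The sketch below is a hypothetical, deliberately small linter, not a reimplementation of Google's checks. The function name `lint_robots_txt`, the list of known directives, and the warning messages are all assumptions made for illustration.

```python
# Directives this sketch treats as valid; an assumption, not an official list.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text):
    """Return a list of (line_number, message) warnings for robots.txt text."""
    warnings = []
    current_agents = []
    in_rules = False  # True once the current group has an Allow/Disallow line
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            warnings.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                current_agents = []
                in_rules = False
            current_agents.append(value.lower())
        elif field in ("disallow", "allow"):
            in_rules = True
            if not current_agents:
                warnings.append((number, "rule appears before any User-agent line"))
            elif field == "disallow" and value == "/" and "*" in current_agents:
                warnings.append((number, "blocks the whole site for every crawler"))
    return warnings


SAMPLE = """\
User-agent: *
Disalow: /wp-admin/
Disallow: /
"""

for line_number, message in lint_robots_txt(SAMPLE):
    print(f"line {line_number}: {message}")
```

The sample deliberately contains a misspelled directive and a site-wide block, the kind of single-character slips that can de-index a site.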

In the example robots.txt file above, you can see that we block spiders from crawling the WP admin page.

You can also use robots.txt to block spiders from crawling WordPress auto-generated tag pages.
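
A file that blocks both the admin area and tag pages might be generated and verified as sketched below. The paths `/wp-admin/` and `/tag/` are assumptions based on a default WordPress setup (your tag base may differ), and the helper `build_robots_txt` and the `example.com` URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Hypothetical helper: build one rule group as robots.txt text."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


# Assumed WordPress paths; adjust them to match your own site.
robots_txt = build_robots_txt(["/wp-admin/", "/tag/"])
print(robots_txt)

# Verify the generated rules with the standard-library parser.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for url in (
    "https://example.com/wp-admin/options.php",
    "https://example.com/tag/seo/",
    "https://example.com/robots-txt-guide/",
):
    print(url, "crawlable:", parser.can_fetch("Googlebot", url))
```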

Robots.txt vs. Meta Directives

You may be wondering why you would use robots.txt when you can block pages with the page-level “noindex” meta tag.

As mentioned earlier, the noindex tag is tricky to use for multimedia resources such as videos and PDFs.

Also, if you have thousands of pages to block, it is easier to block an entire section of the site with robots.txt than to add a noindex tag to every single page.

Even so, our recommendation is to use meta directives rather than robots.txt on your pages, because doing so protects you from accidentally causing major damage to your site.

We hope you found this introduction to robots.txt (what it is and why it matters) informative. If you have any questions, ask us in the comment section. The SEO in Hindi course on this website offers a complete WordPress and SEO course in Hindi, and you can find WordPress videos on our WP Seekho YouTube channel.